Brain-Inspired AI 2.0: Aligning Language Models Across Languages and Modalities

Overview

This tutorial explores the intersection of neuroscience and artificial intelligence, focusing on how insights from human brain processing can inform and improve the development of modern language models. We cover recent research on brain alignment, multilingual understanding, and multimodal processing, providing attendees with both theoretical foundations and practical methodologies.

The rapid progress of deep neural networks (DNNs) and large language models (LLMs) has opened new opportunities to better understand the brain and to design cognitively aligned AI. Working at the interface of computational cognitive neuroscience (CCN) and AI, the tutorial highlights how modern deep learning techniques can be leveraged to explain, model, and enhance our understanding of brain function across modalities and languages.

We begin by introducing the foundations of brain encoding (predicting brain recordings from stimulus representations) and brain decoding (reconstructing stimuli from brain activity). We then discuss recent advances in scaling laws of language models for brain alignment, multilingual and multimodal encoding, and the role of language-guided, instruction-tuned multimodal large language models in explaining task dissociation in the brain. We further cover practical pipelines for brain-informed fine-tuning of text and speech models, including the use of bilingual brain data to enhance cross-lingual semantics, and discuss the resulting improvements in semantic versus syntactic representations. Additionally, we cover interpretability and causal testing, examining when models act as drivers of neural activity and where they diverge from human processing. Under brain decoding, we present case studies including semantic reconstruction from continuous stimuli and image/video reconstruction using latent diffusion models. We conclude with open challenges and research directions toward cognitively aligned, brain-informed AI.

Learning Objectives

- Understand the fundamental concepts of brain-AI alignment and encoding models

- Learn methodologies for comparing brain activity with model representations

- Gain insights into multilingual processing in both human brains and language models

- Explore multimodal integration mechanisms across vision, language, and audio

- Master techniques for brain-informed model fine-tuning and evaluation

- Discover practical applications and future research directions in Neuro-AI
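
To make the idea of an encoding model concrete, here is a minimal sketch of the setup described in the overview: predicting brain recordings from stimulus representations and scoring the predictions against held-out recordings. It is an illustration under stated assumptions, not the pipeline used in the tutorial. The data are synthetic stand-ins (random "model features" and simulated "voxel responses"), the mapping is a closed-form ridge regression without an intercept, and the helper names `fit_ridge` and `voxelwise_pearson` are hypothetical.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def voxelwise_pearson(Y_true, Y_pred):
    """Pearson correlation between true and predicted responses, per voxel."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    denom = np.linalg.norm(yt, axis=0) * np.linalg.norm(yp, axis=0)
    return (yt * yp).sum(axis=0) / denom


# Synthetic stand-ins (assumption): rows are stimulus time points,
# X columns are model features, Y columns are voxels.
rng = np.random.default_rng(0)
n_train, n_test, n_features, n_voxels = 200, 50, 16, 5
W_true = rng.normal(size=(n_features, n_voxels))
X_train = rng.normal(size=(n_train, n_features))
X_test = rng.normal(size=(n_test, n_features))
Y_train = X_train @ W_true + 0.1 * rng.normal(size=(n_train, n_voxels))
Y_test = X_test @ W_true + 0.1 * rng.normal(size=(n_test, n_voxels))

# Fit on the training split, then score predictions on held-out data.
W = fit_ridge(X_train, Y_train, alpha=1.0)
scores = voxelwise_pearson(Y_test, X_test @ W)
print("per-voxel correlation:", np.round(scores, 3))
```

With real data, `X` would hold representations extracted from a language or multimodal model for each stimulus, `Y` would hold the corresponding brain recordings, and the regularization strength would typically be chosen by cross-validation rather than fixed.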

Target Audience

This tutorial is designed for researchers and practitioners with:

- Basic understanding of deep learning and neural networks

- Familiarity with transformer-based language models

- Interest in interdisciplinary approaches to AI

Our material assumes a basic knowledge of machine learning (e.g., foundations of linear models, regression, basic probability, and algebra) and deep learning, including familiarity with concepts such as word embeddings, Transformer models, and backpropagation. Some exposure to cognitive science or neuroscience, as well as programming experience in Python with libraries like PyTorch or scikit-learn, will also be helpful, though not strictly required.

Tutorial Schedule

| Time | Topic | Speaker |
|---|---|---|
| 8:30 - 8:40 | Introduction to Tutorial and Schedule | Subba Reddy Oota |
| 8:40 - 9:30 | Introduction to Brain Encoding and Decoding • Intro to Brain Encoding/Decoding and applications • Alignment Between AI Models and Human Brain Language Comprehension | Manish Gupta |
| 9:30 - 10:30 | Brain Encoding: Scaling Laws, Multilinguality, Multimodal and Instruction-tuned Models • Scaling Laws in fMRI Encoding • Multilingual Brain Encoding • Multimodal Brain Alignment (Unimodal and Multimodal stimuli) • Instruction-tuned MLLMs and Brain Alignment | Subba Reddy Oota |
| 10:30 - 11:00 | Coffee Break & Networking | - |
| 11:00 - 11:30 | Brain-informed Fine-tuning of Language Models • Text-based Language Models + Monolingual Brain Data • Speech-based Language Models + Monolingual Brain Data | Subba Reddy Oota |
| 11:30 - 11:50 | Brain-based Interpretability and Causal Testing of AI Models • Language models as causal drivers of neural activity • Divergence between language models and human brains | Tanmoy Chakraborty |
| 11:50 - 12:20 | Brain Decoding • Semantic reconstruction of continuous language from non-invasive brain recordings • Visual reconstruction from non-invasive brain recordings | Bapi S. Raju |
| 12:20 - 12:30 | Summary and Future Trends • Open challenges and research directions • Q&A and Discussion | Bapi S. Raju |

Instructors

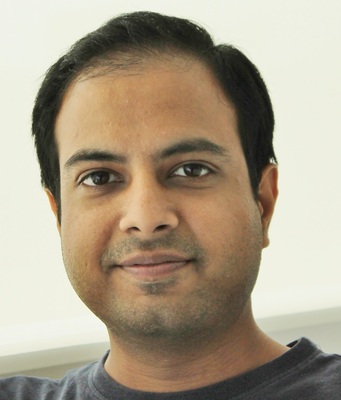

Dr. Subba Reddy Oota

Postdoctoral Researcher

TU Berlin, Germany

Dr. Subba Reddy Oota is a Postdoctoral Researcher at TU Berlin, Germany, and completed his Ph.D. at Inria Bordeaux, France. His research focuses on language analysis in the brain, brain encoding/decoding, multimodal information processing, and interpreting AI models. He has published at top venues including NeurIPS, ICLR, ACL, EMNLP, NAACL, INTERSPEECH, and TMLR.

🏆 SAC Highlight Award at EMNLP 2025

🏆 NeurIPS-2023 Scholar Award

📄 20+ publications at A*/A conferences

Prof. Tanmoy Chakraborty

Associate Professor

IIT Delhi, India

Prof. Tanmoy Chakraborty is an Associate Professor in the Department of Electrical Engineering at IIT Delhi, India. His research spans NLP, graph representation learning, computational social science, and AI for mental health. He has 200+ publications in venues like NeurIPS, ICLR, ICML, AAAI, ACL, EMNLP, and Nature Machine Intelligence.

📊 200+ publications at top-tier venues

🎯 PC Co-chair of EMNLP 2025

Dr. Manish Gupta

Principal Applied Researcher

Microsoft, India

Dr. Manish Gupta is a Principal Applied Researcher at Microsoft, India, and visiting faculty at ISB. His research interests are in the areas of deep learning, web mining, NLP and computational neuroscience. He has published over 200 papers in leading conferences and journals, including ICLR, ICML, NeurIPS, AAAI, ACL, NAACL, EMNLP and TMLR.

🏢 Principal Applied Researcher at Microsoft

📚 200+ publications at premier venues

Prof. Raju S. Bapi

Professor & Lab Head

IIIT Hyderabad, India

Prof. Raju S. Bapi is a Professor and Head of the Cognitive Science Lab at IIIT Hyderabad, India. He previously worked as an EPSRC Research Fellow at the University of Plymouth, UK, and as a Researcher at ATR Labs, Japan. He has published in leading conferences and journals including ICLR, EMNLP, MICCAI, NeuroImage, and Nature Scientific Reports.

🧪 Head of Cognitive Science Lab at IIIT Hyderabad

🌍 International experience (UK, Japan, India)

Tutorial Materials

📚 Resources

All tutorial materials will be made available before the conference and will remain accessible afterwards.

Key Papers & References

This tutorial draws from cutting-edge research including:

Brain Encoding & Alignment

- Interpreting and improving natural-language processing (in machines) with natural language-processing (in the brain) (Toneva & Wehbe, NeurIPS 2019)

- The neural architecture of language: Integrative modeling converges on predictive processing (Schrimpf et al., PNAS 2021)

- Language processing in brains and deep neural networks: computational convergence and its limits (Caucheteux & King, Nature Communications Biology 2022)

- Scaling laws for language encoding models in fMRI (Antonello et al., NeurIPS 2023)

- Joint processing of linguistic properties in brains and language models (Oota et al., NeurIPS 2023)

- Speech language models lack important brain-relevant semantics (Oota et al., ACL 2024)

- Deep Neural Networks and Brain Alignment: Brain Encoding and Decoding (Survey) (Oota et al., TMLR 2025)

Multimodal Processing

- Correlating instruction-tuning in multimodal models with vision-language processing in the brain (Oota et al., ICLR 2025)

- Multi-modal brain encoding models for multi-modal stimuli (Oota et al., ICLR 2025)

- Vision-Language Integration in Multimodal Video Transformers (Partially) Aligns with the Brain (Dong et al., arXiv 2023)

- Brain encoding models based on multimodal transformers can transfer across language and vision (Tang et al., NeurIPS 2023)

Brain-Informed Models

- Brain-Informed Fine-Tuning for Improved Multilingual Understanding in Language Models (Negi et al., NeurIPS 2025)

- Improving Semantic Understanding in Speech Language Models via Brain-tuning (Moussa et al., ICLR 2025)

- BrainWavLM: Fine-tuning Speech Representations with Brain Responses to Language (Vattikonda et al., arXiv 2025)

Applications & Impact

Research Applications

- Developing more human-like AI systems

- Understanding model interpretability through neuroscience

- Designing better evaluation metrics

- Cross-modal learning and transfer

Industry Applications

- Enhanced multilingual systems for global markets

- Improved human-AI interaction

- Brain-inspired architectures for edge devices

- Cognitive load optimization in interfaces

Tutorial History

Earlier versions of this tutorial have been presented at major conferences such as IJCAI, IJCNN and CogSci. The current version offers an advanced and updated perspective, incorporating recent developments in large language models (LLMs), multimodal LLMs, and their alignment with brain data.

- Subba Reddy Oota, Bapi Raju Surampudi. Language and the Brain: Deep Learning for Brain Encoding and Decoding. CODS-COMAD 2024.

- Subba Reddy Oota, Manish Gupta, Bapi Raju Surampudi, Mariya Toneva. Deep Neural Networks and Brain Alignment: Brain Encoding and Decoding. IJCAI 2023.

- Subba Reddy Oota, Manish Gupta, Bapi Raju Surampudi. Language and the Brain: Deep Learning for Brain Encoding and Decoding. IJCNN 2023.

- Subba Reddy Oota, Jashn Arora, Manish Gupta, Bapi Raju Surampudi, Mariya Toneva. Deep Learning for Brain Encoding and Decoding. CogSci 2022.

Prerequisites

Technical Background:

- Familiarity with basic algebra

- Familiarity with PyTorch or TensorFlow

- Basic knowledge of transformers (BERT, GPT, etc.)

- Understanding of basic machine learning concepts

No Prior Neuroscience Background Required!

- All computational cognitive neuroscience concepts will be introduced from scratch

- Intuitive explanations with visual brain maps

- Focus on bridging the two systems: human brains and AI models

Registration & Contact

Tutorial Registration: Registration will be through the main AAAI-2026 conference website.

Questions? Contact the instructor:

- Email: subbareddyoota@gmail.com

- Twitter: @subbareddy300

- GitHub: @subbareddy248

Stay Updated

Follow for updates on:

- Tutorial materials release

- Additional resources

- Post-tutorial discussions

🎯 Why Attend This Tutorial?

- Cutting-Edge Research: Learn about the latest advances in brain-AI alignment from 2023-2025

- Interdisciplinary Insights: Bridge neuroscience and AI for novel research directions

- Networking: Connect with researchers at the forefront of Neuro-AI

- Career Development: Open doors to emerging opportunities in brain-inspired AI

Looking forward to seeing you at AAAI 2026! 🧠🤖